Edmund Jorgensen wrote this great post that laid out a scenario in which an engineer approaches an engineering manager and says the following:

'Look,' says Cindy, the first engineer, 'I know that the CEO is breathing down our neck to finish the new Facebook for Cats integration, but we've got to clear some time to work on automating database migrations. I’m the only one who knows enough to apply them to the prod DB, and I’m getting tired of spending half an hour every morning rolling out everyone else’s changes. So can we push a feature or two back and squeeze that in?'

Here's how Edmund said a typical, business-focused engineering manager would reply:

'Cindy, I'm sorry to hear that you're getting bored doing so much production DB work, but realistically it would take you at least 40 hours of work to write, test, and deploy a migration utility, right? So if you're spending a half hour a day on migrations, it would be 80 working days before we saw a return on our investment—that's like 4 months, and that's just too long for me to sanction—precisely because you're such a valuable member of the team, and I can't spare so much of your time right now away from our feature backlog. We can touch base if the migration workload increases too much, OK? Until then, I have to ask you to put your head down and be a team player.'
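The manager's objection is a simple break-even calculation: hours invested divided by hours saved per day. The Python sketch below uses only the figures from the quote. The conversion of 20 working days per month is an assumption. Note that the model counts only Cindy's own half hour, not the time of everyone queued behind her.

```python
def break_even_days(investment_hours: float, hours_saved_per_day: float) -> float:
    """Working days until the time saved equals the time invested."""
    if hours_saved_per_day <= 0:
        raise ValueError("hours_saved_per_day must be positive")
    return investment_hours / hours_saved_per_day

# Figures from the manager's reply: 40 hours to build, 0.5 hours saved per day.
days = break_even_days(investment_hours=40, hours_saved_per_day=0.5)
months = days / 20  # assumption: roughly 20 working days per month
print(f"{days:.0f} working days, about {months:.0f} months")
```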

Through the lens of an engineering manager who is solely focused on completing their current & near-term projects on time, that is a respectable response. However, here's what Edmund said you should have thought after hearing Cindy's request:

DANGER, WILL ROBINSON — a queue is forming in your engineering org. Cindy has become a bottleneck for changes making their way to production, and a queue of people trying to make those changes is forming behind her. Queues are one of the clearest signals of developing latency. What happens if Cindy is out for a few days on (gasp) vacation? No changes will go out ... You should probably allow Cindy to do her migration project and you should definitely explain to her why you're allowing it.

Edmund is highlighting one of an engineering team leader's responsibilities that offers great leverage: identifying opportunities that increase the team's long-term throughput.

Identifying a leverage opportunity is one thing; getting your business owners to prioritize it is another. For an ecommerce company, the business owners are typically the product & marketing teams. In my experience, getting your business owners to support efforts that are unrelated to their KPIs and don't impact the customer requires trust: trust that the ROI will be worth it, trust that you're not padding time estimates, and trust that you're not exaggerating the win. The only way to gain this trust is to earn it. Fight for your opportunities and let the results speak for themselves.

#1: Buying back your backlog

Take a look at your past 5 sprints. Put each developer story into a spreadsheet and give each story a weight (ex: how many days it took to complete the story). Now categorize each story based on the type of activity (ex: feature development, support, etc.). This should give you a sense of how your engineering resources are currently being allocated.
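If the spreadsheet lives in code instead, the tally is a group-by over (category, weight) pairs. The Python sketch below computes each category's share of total effort. The story list and its category names are hypothetical placeholders, not real sprint data.

```python
from collections import defaultdict

# Hypothetical stories from the last 5 sprints: (category, days to complete).
stories = [
    ("feature development", 5),
    ("feature development", 8),
    ("support", 2),
    ("metrics/dashboards", 3),
    ("feature development", 6),
    ("support", 1),
]

def allocation_by_category(stories):
    """Return each category's share of total story weight, largest first."""
    totals = defaultdict(float)
    for category, weight in stories:
        totals[category] += weight
    grand_total = sum(totals.values())
    shares = {category: weight / grand_total for category, weight in totals.items()}
    return dict(sorted(shares.items(), key=lambda item: item[1], reverse=True))

for category, share in allocation_by_category(stories).items():
    print(f"{category:<22}{share:6.1%}")
```

Any category whose work could be handed to a paid service is a candidate for the question in the next paragraph.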

Now ask yourself this question: what activities can be removed from your backlog by a paid service?

We did this exercise almost two years ago and discovered that we were spending 10-15% of our engineering resources pulling metrics and building/updating dashboards. If we could offload our business intelligence needs to a paid service, we would free up that 10-15%. On a 10-person team, that's 1 to 1.5 engineers' worth of time, roughly equivalent to adding another engineer to work the backlog. Consider the cost of an engineer in terms of recruiting, ramp-up time, and salary: more often than not, a service is more cost-effective than having an engineer perform those tasks internally.

The 'buy or build' question can be difficult, as many factors play into the decision, but for business intelligence it turned out to be a no-brainer. After a BI tool bake-off, we chose Looker and never went back.

#2: Distributed responsibilities

Looking for bottlenecks within your organization is a great way to discover leverage opportunities. Bottlenecks typically occur around someone in the organization or around a particular process. For us, one of our process bottlenecks used to be the testing phase of a project.

We work in two-week sprints and used to dedicate the second half of the sprint's second week to testing. That meant 25% of our engineering bandwidth went to work outside backlog development. This may seem excessive, but even though our code is well covered by unit and integration tests, we still like to test every flow to ensure the UX is right before shipping a new feature.
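Assuming 10 working days in a two-week sprint, the second half of the second week is 2.5 days, which is where the 25% figure comes from:

$$
\frac{2.5\ \text{days of testing}}{10\ \text{working days per sprint}} = 0.25 = 25\%
$$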

While testing is very important to us, it should not consume 25% of our bandwidth. During one of our sprint retrospectives, we were brainstorming ways to streamline our testing process and realized we were underutilizing the internal customers who know our product best: the rest of the company. This led to what we call 'Mob Testing'.

Every sprint, we fire up a new testing document that lists every flow: logged-in, logged-out, desktop web, mobile web, etc. (even Internet Explorer). The most important column in this doc is titled 'Tested By'. Everyone who tests signs up for a flow. Testing is a team effort, and we want to hold everyone accountable for doing their part. If someone doesn't test, we'll know.
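The testing doc is essentially a table of flows with a 'Tested By' column. The Python sketch below models that structure so that flows nobody has claimed can be listed. The class name, its methods, and the flow labels are hypothetical illustrations and not part of any tool described here.

```python
from dataclasses import dataclass, field

@dataclass
class MobTestingDoc:
    """A sprint's testing doc: one row per flow, plus a 'Tested By' column."""
    flows: list[str]
    tested_by: dict[str, list[str]] = field(default_factory=dict)

    def sign_up(self, flow: str, person: str) -> None:
        """Record that `person` commits to testing `flow`."""
        if flow not in self.flows:
            raise KeyError(f"unknown flow: {flow}")
        self.tested_by.setdefault(flow, []).append(person)

    def untested(self) -> list[str]:
        """Flows nobody has signed up for yet, in document order."""
        return [flow for flow in self.flows if not self.tested_by.get(flow)]

# Hypothetical flows and testers for one sprint.
doc = MobTestingDoc(flows=[
    "logged-in / desktop web",
    "logged-out / desktop web",
    "logged-in / mobile web",
    "logged-out / mobile web",
    "logged-in / Internet Explorer",
])
doc.sign_up("logged-in / desktop web", "Alice")
doc.sign_up("logged-out / mobile web", "Bob")
print(doc.untested())
```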

We've found the most efficient way to approach mob testing is to roll it out in phases. If you release it to everyone at once, you'll be overwhelmed with feedback. That's why the engineers and stakeholders test first: they know the project best. Once they give it their blessing, we roll testing out to the product & marketing teams. After we tweak/fix everything from the first two phases, we roll it out to the rest of the company. This final phase ensures we didn't introduce any new bugs while incorporating feedback from the first two phases.

It took us a while to figure out how to efficiently collect and act on testing feedback, but after distributing testing responsibilities to the entire company, we eventually cut our testing process from a 3-day activity to a 1-day activity.

#3: Automate everything

In Edmund's scenario, Cindy is a highly skilled engineer spending development time on non-development activities (running migrations for other engineers). My suggestion here is to automate everything: the larger your team, the greater the return on automation.

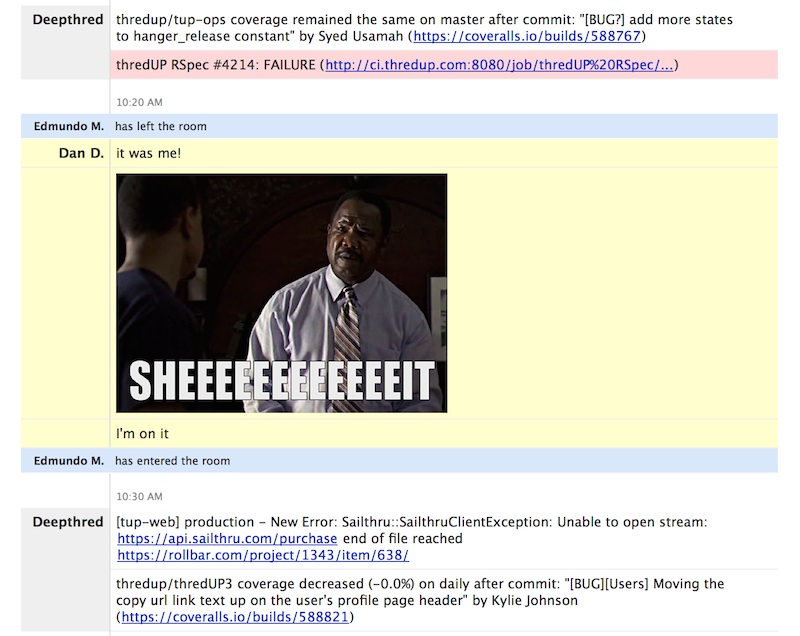

Having a thorough test suite that runs via a CI server after every push to your release branch and pipes the results into your dev chat room should be a given. The question is: what else can be automated after every push of code?

For one, our CI server runs a rake task before the test suite even starts, to make sure we didn't commit anything that shouldn't be in production. If you leave a `debugger;` or `console.log()` statement in your JS, the rake task fails the build. We've added similar checks for every language we use, because we believe a build that breaks readily, with clear error messages, is critical to scaling the team. As engineers, we don't try to break the build, but every time one of these checks catches an error, it saves us from discovering the problem later and having to drop what we're doing to deploy a fix, which adds up over time.
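The original check is a rake task in Ruby. The Python sketch below is a stand-in that shows the same idea: scan JS files for forbidden patterns, report each hit with a clear message, and return a nonzero code so CI fails the build. The pattern list is illustrative and not exhaustive. For example, it would miss a `debugger` that has no trailing semicolon, and it would flag matches inside comments.

```python
import re
import sys
from pathlib import Path

# Illustrative patterns that should never reach production JS.
FORBIDDEN = {
    "debugger statement": re.compile(r"\bdebugger\s*;"),
    "console.log call": re.compile(r"\bconsole\.log\s*\("),
}

def find_violations(source: str, filename: str = "<string>") -> list[str]:
    """Return one human-readable message per offending line."""
    messages = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for label, pattern in FORBIDDEN.items():
            if pattern.search(line):
                messages.append(f"{filename}:{lineno}: {label} found: {line.strip()}")
    return messages

def main(root: str = ".") -> int:
    """Scan every .js file under `root`; return 1 if anything was found."""
    messages = []
    for path in Path(root).rglob("*.js"):
        if "node_modules" in path.parts:
            continue  # skip third-party code
        messages.extend(find_violations(path.read_text(encoding="utf-8"), str(path)))
    for message in messages:
        print(message, file=sys.stderr)
    return 1 if messages else 0  # a nonzero exit code fails the CI build
```

In CI, the script's entry point would call `sys.exit(main())` before the test suite runs, so any hit stops the build with a file-and-line message.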

While our CI server runs, Code Climate analyzes the branch that was pushed. When it finishes, it pipes the results into our chat room so we're notified of any new vulnerabilities or declines in code quality. In the past, code quality was determined during code review: if the reviewers decided something needed to be refactored, the author would go back to coding, refactor, and then the code review session would start again. With Code Climate, the author knows ahead of time to refactor or revisit their implementation, which saves everyone a meeting.
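Piping CI and code-quality results into chat usually means POSTing a short message to a chat tool's incoming webhook. The Python sketch below assumes a webhook that accepts a JSON body with a `text` field, which is the shape Slack's incoming webhooks use. The webhook URL, the function names, and the summary format are all hypothetical, and the sketch does not use Code Climate's own integration.

```python
import json
import urllib.request

def format_ci_summary(branch: str, passed: int, failed: int, quality_notes: list[str]) -> str:
    """Build a one-glance chat message from test counts and quality notes."""
    status = "PASSED" if failed == 0 else "FAILED"
    lines = [f"[{status}] {branch}: {passed} passed, {failed} failed"]
    lines += [f"- {note}" for note in quality_notes]
    return "\n".join(lines)

def post_to_chat(webhook_url: str, text: str) -> int:
    """POST {"text": ...} as JSON to a (hypothetical) incoming-webhook URL."""
    body = json.dumps({"text": text}).encode("utf-8")
    request = urllib.request.Request(
        webhook_url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status
```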

To return to Edmund's scenario, Cindy was a bottleneck for engineers trying to get their code to production. At thredUP, everyone can deploy to production, so our production application is deployed multiple times per day by engineers of all experience levels. Everyone can also deploy to our internal staging servers, which means we can have 5 different projects being tested in staging environments at the same time without having to set up production data manually for each one.

Lastly, look for improvements that have leverage across multiple teams. For example, whenever we receive 5 or more customer support (CS) tickets of the same nature that need a developer, we try to determine whether we can fix the issue at the source so those tickets stop coming in. If we can't, we look for a way to automate the solution for the CS team. One example is when customers forget to apply a coupon code to their order. There's no bug to fix, and we can't retroactively change their payment transactions, so we built a simple tool that lets our CS team generate coupons for customers to use on a future order. This tool, which took only a couple of hours to build, saves our engineers a handful of CS tickets every day and lets our CS team resolve these situations with customers faster.
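Both halves of that workflow are small. The Python sketch below covers the "5 or more tickets of the same nature" trigger, which is a count per ticket category, and a coupon generator of the kind a CS tool might call. The ticket categories, the code length, and the alphabet are hypothetical. Storing the coupon and tying it to a customer and an amount would be left to the real system.

```python
import secrets
import string
from collections import Counter

RECURRING_THRESHOLD = 5  # "5 or more tickets of the same nature"

def recurring_issues(ticket_categories: list[str], threshold: int = RECURRING_THRESHOLD) -> list[str]:
    """Categories that hit the threshold: candidates for a root-cause fix or a CS tool."""
    counts = Counter(ticket_categories)
    return sorted(category for category, n in counts.items() if n >= threshold)

COUPON_ALPHABET = string.ascii_uppercase + string.digits

def generate_coupon_code(length: int = 10) -> str:
    """Random, hard-to-guess code a CS agent could issue for a future order."""
    # secrets (not random) so codes are not predictable from earlier ones.
    return "".join(secrets.choice(COUPON_ALPHABET) for _ in range(length))

# Hypothetical week of developer-escalated CS tickets, by category.
tickets = ["forgot coupon"] * 6 + ["password reset"] * 2 + ["address change"]
print(recurring_issues(tickets))
```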

Opportunities like this exist everywhere for just about every team and it's your job to identify, prioritize, and fight for them. If you have some great tips you would like to share, I'd love to hear about them.